Estimating the 2024 Costs of Developing Artificial Intelligence Software

As generative AI capabilities continue to advance across various industries, organizational leaders face the challenge of integrating these functionalities for both internal and external users. Similar to other technologies, generative AI technology comes in various forms, provided by multiple vendors, with deep and wide tech stacks. Depending on their specific use cases, leaders have the option to:

- Buy: Adopt a SaaS model and subscribe to API services on a pay-as-you-go basis.

- Build: Develop a solution from the ground up.

- Neither: Utilize existing models and enhance them to suit their needs.

In this post, we explore various mental models to consider when making decisions about building or buying foundation models. Additionally, we provide high-level cost estimates for operating in each of these areas. When contemplating whether to build or buy foundation models, take into account the following factors:

- Time-to-Market: Assess how quickly you need the functionality deployed to production. Are there urgent timelines set by your CEO and board, demanding readiness in the next week or month?

- Skills: Evaluate whether your team possesses the necessary scientific skills or if you can quickly hire or outsource them. While you don’t need a large team, having a few skilled individuals is crucial. BloombergGPT and Falcon LLMs, for instance, had teams of fewer than 10 people, including leaders.

- Budget: Determine the financial resources you are willing to allocate. Consider whether you prefer operational expenses (opex) or capital expenses (capex).

- Intellectual Property (IP): Decide if owning the intellectual property of the foundation model is a priority for your organization.

- Security and Privacy: Consider the sensitivity of your data and whether you are comfortable exposing it to third-party model providers.

Buy

Before opting for a subscription pay-as-you-go model for generative AI operations, it’s crucial to be aware of potential privacy concerns. When utilizing third-party services for tasks like summarization or text generation, you often need to send information, such as your organization’s data, in the form of a prompt to the provider. Not all providers automatically keep your data private; some may use the data for re-training their foundation models. Enterprises should exercise caution to avoid exposing proprietary information.

As a biased side note, Amazon Bedrock stands out by not using customer data to train models, ensuring isolation per customer and maintaining data privacy and security.

Assuming security considerations are addressed, let’s explore how the subscription model works. It operates based on the tasks you aim to perform. For instance, if your users want to summarize a lengthy article, you can create a mechanism for them to send the article to the model or service. Within seconds, the model responds with a summarized version of the article. Let’s consider a sample article of 500 words. When we submit this article to the AI service for summarization, the response is a concise 91-word version that distills the essential information. This lets users obtain the key insights quickly without reading the entire article.

Prompt and response

The cost of this summarization is remarkably low: about $0.00128, well under a penny. This calculation uses OpenAI’s GPT-3.5 Turbo model with a 4,000-token context. Model providers use token-based pricing, and 750 words in English are approximately equivalent to 1,000 tokens. In our example, the 500-word prompt costs $0.00105 and the 91-word response costs $0.0002275. At that model’s rates of $0.0015 per 1,000 input tokens and $0.002 per 1,000 output tokens, those amounts correspond to roughly 700 prompt tokens and 114 response tokens. These costs show how affordable AI services can be for specific tasks.
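The token-based pricing arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not a billing tool: the per-1K-token prices and the token counts are assumptions, chosen because they reproduce the dollar figures quoted in this article, and the function name `request_cost` is hypothetical.

```python
# Assumed GPT-3.5 Turbo (4K context) prices; verify against current pricing.
INPUT_PRICE_PER_1K = 0.0015   # USD per 1,000 prompt tokens (assumption)
OUTPUT_PRICE_PER_1K = 0.002   # USD per 1,000 response tokens (assumption)


def request_cost(prompt_tokens: float, response_tokens: float) -> float:
    """Return the USD cost of one request under token-based pricing."""
    prompt_cost = prompt_tokens / 1000 * INPUT_PRICE_PER_1K
    response_cost = response_tokens / 1000 * OUTPUT_PRICE_PER_1K
    return prompt_cost + response_cost


# Token counts back-solved from the article's dollar amounts (assumption).
cost = request_cost(prompt_tokens=700, response_tokens=113.75)
print(f"Cost per summarization: ${cost:.7f}")
```

Multiplying the per-request cost by an expected request volume gives a rough monthly budget for the buy option.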

Adopting foundation models in a consume-as-a-service mode can drastically cut time-to-market, enabling production deployment within weeks instead of months. However, bear in mind that usage-based fees are an ongoing cost, and they grow as use cases and request volumes multiply.

Build

Now, let’s delve into the scenario of building a foundation model. Constructing or training large-scale foundation models from scratch, or pre-training them, demands vast amounts of data, computing power, and financial resources. When assessing the cost of building or pre-training a model, various factors come into play:

- Fixed price (to train the model),

- Variable price (to serve the model),

- Time-value of skilled scientists and engineers to build and evaluate such models,

- The ability to process the data or acquire the data.

Fixed Price

Several large models trained in recent history provide insights into pre-training costs. For instance, Google’s PaLM model training cost was estimated to be around $12 million, albeit over a year ago. The landscape of Large Language Models (LLMs) is evolving rapidly, with advancements in hardware, research, and more efficient training methods likely leading to reduced time and costs for pre-training models.

In a more recent development, the Meta AI team revealed in the LLaMA 2 paper (July 2023) that they trained multiple models (7B, 13B, 34B, and 70B) using a total of 3.3 million GPU hours. Their largest model, with 70 billion parameters, used 1,720,320 GPU hours on NVIDIA A100-80G hardware. The current minimum price for a single NVIDIA A100-80G (outside of data centers) is $2.21 per hour. At that rate, 1,720,320 GPU hours × $2.21 means it would have cost Meta at least $3.8 million to train the 70B parameter model.
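The fixed-cost estimate is a single multiplication, shown here as a small Python sketch so the GPU price can be swapped for your own quote. The function name `training_compute_cost` is hypothetical; the inputs are the figures quoted above, and the result covers GPU rental only, not storage, networking, or failed runs.

```python
def training_compute_cost(gpu_hours: float, price_per_gpu_hour: float) -> float:
    """Return the GPU rental cost in USD for a training run."""
    return gpu_hours * price_per_gpu_hour


LLAMA2_70B_GPU_HOURS = 1_720_320   # A100-80G hours reported for the 70B model
A100_PRICE_PER_HOUR = 2.21         # USD per GPU-hour, the article's baseline

cost = training_compute_cost(LLAMA2_70B_GPU_HOURS, A100_PRICE_PER_HOUR)
print(f"Estimated training compute cost: ${cost:,.0f}")
```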

Variable Price

In the previous case of purchasing a service from OpenAI, charges were based on tokens. However, when building your own models, you’ll need to handle the hosting/serving of models, either in your data centers or on the cloud. While running models internally, costs are typically calculated based on hardware/software usage hours rather than tokens processed. Assuming you can procure the A100-80G at $2.21/hr and acquire the entire host (with 8 GPU cards), your base cost for hosting is $17.68/hr.

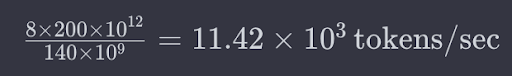

The maximum sequence length for inference depends on factors like batch size, compute and memory usage, and model parallelism. Generally, the memory needed to hold the model is about 2 bytes per parameter at 16-bit precision, so a 40-billion-parameter model needs about 2 * 40 = 80 GB, and a 70-billion-parameter model needs about 140 GB. The compute requirement is a combination of prompt size (tokens) and the number of parameters. The NVIDIA A100-80G can deliver 312 TFLOPS and has 2 TB/s of memory bandwidth. Assuming FLOPs utilization maxes out at 70%, we get about 218 TFLOPS, which we round down to 200 TFLOPS per GPU. The hosting cost is $17.68/hr, which translates to $0.0049/sec. The FLOPs requirement for the 70B LLaMA model is about 140 GFLOPs/token (roughly 2 FLOPs per parameter). Calculating tokens/sec across the 8 GPUs in the host yields:

tokens/sec = (8 × 200 TFLOPS) / (140 GFLOPs/token) ≈ 11,429

cost per token = ($0.0049/sec) / (11,429 tokens/sec) ≈ $0.000000429

This gives us $0.000429/1K tokens.
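The serving-cost estimate can be reproduced with a short Python sketch. It rests on simplifying assumptions that should be read as such: about 2 FLOPs per parameter per token (so about 140 GFLOPs per token for a 70B model), 200 effective TFLOPS per GPU, all 8 GPUs in the host kept fully busy, and no allowance for memory-bandwidth limits or idle time. The function name `cost_per_1k_tokens` is hypothetical.

```python
def cost_per_1k_tokens(
    host_price_per_hour: float,
    num_gpus: int,
    effective_tflops_per_gpu: float,
    gflops_per_token: float,
) -> float:
    """Return the USD cost to process 1,000 tokens on a fully utilized host."""
    # Compute-bound throughput: total FLOPs/sec divided by FLOPs per token.
    flops_per_sec = num_gpus * effective_tflops_per_gpu * 1e12
    tokens_per_sec = flops_per_sec / (gflops_per_token * 1e9)
    price_per_sec = host_price_per_hour / 3600
    return price_per_sec / tokens_per_sec * 1000


estimate = cost_per_1k_tokens(
    host_price_per_hour=17.68,     # 8 x $2.21 per A100-80G hour
    num_gpus=8,
    effective_tflops_per_gpu=200,  # about 70% of the 312 TFLOPS peak, rounded down
    gflops_per_token=140,          # about 2 FLOPs per parameter for a 70B model
)
print(f"${estimate:.6f} per 1K tokens")
```

Without rounding the per-second price to $0.0049, the result is about $0.00043 per 1K tokens. Real deployments rarely sustain full utilization, so treat this as a lower bound.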

People/Skills

To develop a model akin to LLaMA 2, a team of highly skilled scientists and engineers is essential. Meta credits 67 people from their team for building all four LLaMA 2 models. Assuming for simplicity that each model was built by about 17 people (67 / 4), that is still a substantial team. Other model creators, such as Bloomberg and TII, have built large models (the 50B BloombergGPT and the 40B Falcon, respectively) with teams of fewer than 10 people. This is partly due to their use of managed service offerings like Amazon SageMaker, which simplify infrastructure management and training orchestration. Assuming an average salary of $250,000/year for each person at Meta and a 3-month timeframe for building the model, the people cost is 17 × $250,000 × 3/12, or approximately $1M.
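The staffing estimate follows the same pattern, sketched below in Python. The team size, salary, and duration are the article's simplifying assumptions, and the function name `staffing_cost` is hypothetical; it counts salary only and ignores benefits, recruiting, and overhead.

```python
def staffing_cost(team_size: int, annual_salary: float, months: float) -> float:
    """Return the salary cost in USD for a team over a number of months."""
    return team_size * annual_salary * months / 12


cost = staffing_cost(team_size=17, annual_salary=250_000, months=3)
print(f"Estimated people cost: ${cost:,.0f}")
```

A team of fewer than 10 people, as reported for BloombergGPT and Falcon, would lower this figure proportionally.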

Time-to-market

According to the Meta team, the LLaMA 2 model was trained between January 2023 and July 2023. On average, it takes 3-6 months to build and train such a large model. Opting to build a model, as opposed to buying a service, will extend your time-to-market.

In summary, building a custom foundation model similar to LLaMA 2, focusing on summarization and sentiment analysis of public companies, could incur around $3.8 million in fixed expenses, $4,000 in variable inference costs, and $1 million for staffing, totaling approximately $4.8 million. The advantage of constructing a powerful general-purpose model like LLaMA 2 is its ability to handle numerous additional tasks beyond the initial use cases, requiring only incremental variable costs for more inferences. However, periodic retraining of the model might be necessary to keep it current, incurring additional expenses. The significant upfront investment for developing a custom foundation model can be justified if the model’s versatility allows spreading fixed costs across many production applications.
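To make the amortization argument concrete, here is a minimal Python sketch that totals the three cost components and spreads the one-time costs across a number of production applications. The dollar amounts are the article's estimates; the application counts and the function names are hypothetical and only illustrate the shape of the trade-off.

```python
FIXED_TRAINING_COST = 3_800_000   # USD, GPU compute for pre-training
VARIABLE_INFERENCE_COST = 4_000   # USD, serving cost for the initial use cases
STAFFING_COST = 1_000_000         # USD, people cost


def total_build_cost() -> int:
    """Return the summed build estimate in USD."""
    return FIXED_TRAINING_COST + VARIABLE_INFERENCE_COST + STAFFING_COST


def upfront_cost_per_application(num_applications: int) -> float:
    """Spread the one-time costs (training and staffing) evenly across applications."""
    if num_applications < 1:
        raise ValueError("num_applications must be at least 1")
    return (FIXED_TRAINING_COST + STAFFING_COST) / num_applications


print(f"Total build estimate: ${total_build_cost():,}")
for n in (1, 5, 20):  # hypothetical numbers of production applications
    print(f"{n} application(s): ${upfront_cost_per_application(n):,.0f} upfront each")
```

Each additional application adds only its own inference cost, which is why the per-application share of the upfront investment falls as adoption widens.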

Note: The above estimates do not account for any data processing requirements, as these can vary widely depending on each organization’s unique data and workflows.

Conclusion: The decision of whether to build an in-house foundation model, fine-tune a pre-trained model, or use an external API depends on various factors, including cost, staffing, risk tolerance, IP ownership, and available data. This article has outlined the investments required for both building and licensing foundation model services to fulfill specific tasks. However, the competition to develop improved foundation models is ongoing, with companies continuously creating larger, more accurate, and more efficient models. When evaluating newly developed models, organizations must also consider whether a model is open source or commercially licensed. Ultimately, leaders need to carefully assess the tradeoffs among building, fine-tuning, and buying to determine the best path forward for their foundation model requirements.